Some larger businesses have machines and switches running on 10Gbps networks; CAT 7 or fiber cable and some expensive switches and NICs are all you need, after all. Well, that’s a little beyond my budget (and needs, for that matter), but I’d settle for 2Gbps on my Synology DiskStation web/file/media/&tc. server.

This post outlines my adventures in setting up an aggregated link bond on my Synology DiskStation. It’s not a detailed “how-to” document, but it’s detailed enough to point anyone looking for help in the right direction.

Link aggregation, made possible by the glorious IEEE 802.3ad networking standard, is basically the idea of connecting your machine (desktop, server, NAS, whatever) to a network with two or more physical connections and combining them into one virtual connection that shares the bandwidth of all of them. In other words, if you have a server with two gigabit-capable NICs, you could, with the right kind of switch, have a theoretical combined bandwidth of up to two gigabits per second in and out of that machine. If you have a machine with four gigabit-capable NICs, you could have up to four gigabits per second. Not too shabby, eh?
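One detail worth knowing: 802.3ad doesn’t split a single transfer across links. Each flow (roughly, each conversation between a pair of endpoints) is pinned to one member link by hashing its addresses, so one copy tops out at one link’s speed, and the extra bandwidth only appears when several flows land on different links. The Python sketch below is a toy model of that behavior. It assumes a simplified layer-2 style hash (XOR of the last octet of the source and destination MAC addresses, modulo the number of links); the function names and MAC addresses are hypothetical, and real switches and the Linux bonding driver use their own hash policies.

```python
"""Toy model of how an 802.3ad bond spreads traffic across member links.

Assumption: a simplified layer-2 style hash (XOR of the last octet of the
source and destination MACs, modulo the number of links). Real switches and
the Linux bonding driver use their own, more elaborate hash policies.
"""
from __future__ import annotations

LINK_SPEED_MBPS = 1000  # each member link is gigabit


def pick_link(src_mac: str, dst_mac: str, num_links: int) -> int:
    """Return the index of the member link a flow is pinned to."""
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    return (src_last ^ dst_last) % num_links


def max_throughput_mbps(flows: list[tuple[str, str]], num_links: int) -> int:
    """Upper bound on combined throughput: one link's speed for each link
    that carries at least one flow. A single flow never exceeds one link."""
    used_links = {pick_link(src, dst, num_links) for src, dst in flows}
    return len(used_links) * LINK_SPEED_MBPS


if __name__ == "__main__":
    nas = "00:11:32:aa:bb:10"   # hypothetical MAC addresses
    pc_a = "00:1f:16:00:00:01"
    pc_b = "00:1f:16:00:00:02"
    print(max_throughput_mbps([(nas, pc_a)], 2))               # 1000
    print(max_throughput_mbps([(nas, pc_a), (nas, pc_b)], 2))  # 2000
```

With two PCs whose addresses hash to different links, the combined ceiling is 2000 Mbps; a single copy stays at 1000 Mbps no matter how many links are in the bond.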

What is required? For starters, you’ll need a machine with two or more NICs, preferably gigabit NICs; if you’re old school and have one with two 100Mbps NICs, the principle remains the same. You’ll also need an operating system that supports this feature. I’ve never set it up on Windows or Mac, but I believe Windows, Mac, and Linux all support it natively, at least in the server editions of those OSes. In my example, I’m using a Synology DiskStation 412+ running DiskStation Manager 4.3, Synology’s custom Linux variant, which makes 802.3ad link aggregation very easy.

Finally, and perhaps most importantly, you need a switch that supports the 802.3ad standard. I’m not aware of any consumer-grade switches or routers that support it; as far as I know, only managed, enterprise-level switches offer it. I searched eBay extensively and found quite a few gigabit PoE switches with 802.3ad support (look up the product name on Google and search the switch’s manual PDF or detailed product information for “802.3ad” to verify it’s actually capable of this). All of the switches I wanted, though, were either much too expensive for what I was willing to spend or only 100-megabit switches. Then I found it: an HP ProCurve 2824 for only $25 on a local classified ad site similar to Craigslist. $25! The cheapest used price I could find elsewhere was $230, and new units cost over a thousand. So I snatched it up. Sometimes you just have to be willing to take your time.

Now I had all I needed.

With an 802.3ad-capable gigabit switch in hand, I connected all of my cables and watched the pretty lights blink. I reset the switch, logged in via web browser, and set up the initial IP and security settings the way I wanted them. Then I aggregated the two ports my Synology DiskStation was connected to into a trunk by telnetting into the switch and following these simple instructions. They ought to be similar for other HP switches, and other brands’ switches can’t be much more complicated. Then I aggregated the DiskStation’s connections the same way I had done previously to test its capabilities (also outlined in the instructions linked above). Even though the DSM control panel shows only a 1000Mbps full-duplex link on the aggregated bond, you actually have up to 2000Mbps of combined full-duplex capacity, as long as you don’t see any errors in red text next to the speed.
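If you’d rather confirm that both member links are really up than trust the DSM display, the Linux bonding driver reports each bond’s status as a text file. The Python sketch below assumes the bond is named bond0, that its status is exposed at /proc/net/bonding/bond0, and that you can run Python 3 on the DiskStation over SSH; none of that comes from Synology’s documentation, so check your own unit. The function names are hypothetical helpers, not part of DSM.

```python
"""Sum the speeds of the active member links of a Linux bond.

Assumptions: the bond is named bond0, the Linux bonding driver exposes its
status at /proc/net/bonding/bond0, and Python 3 is available on the NAS.
"""
from pathlib import Path


def bonding_mode(status_text: str) -> str:
    """Return the 'Bonding Mode:' value, or an empty string if absent."""
    for line in status_text.splitlines():
        line = line.strip()
        if line.startswith("Bonding Mode:"):
            return line.split(":", 1)[1].strip()
    return ""


def aggregate_speed_mbps(status_text: str) -> int:
    """Add up 'Speed: N Mbps' for every slave whose MII Status is up."""
    total = 0
    in_slave = False   # only count lines inside a 'Slave Interface' section
    slave_up = False
    for line in status_text.splitlines():
        line = line.strip()
        if line.startswith("Slave Interface:"):
            in_slave = True
            slave_up = False
        elif in_slave and line.startswith("MII Status:"):
            slave_up = line.split(":", 1)[1].strip() == "up"
        elif in_slave and slave_up and line.startswith("Speed:"):
            value = line.split()[1]  # "1000" from "Speed: 1000 Mbps"
            if value.isdigit():      # skip "Speed: Unknown"
                total += int(value)
    return total


if __name__ == "__main__":
    status_file = Path("/proc/net/bonding/bond0")  # assumed bond name
    if status_file.exists():
        text = status_file.read_text()
        print(bonding_mode(text))
        print(aggregate_speed_mbps(text), "Mbps across active member links")
    else:
        print(f"{status_file} not found; is the bond named differently?")
```

On a healthy two-link bond, the mode line should mention 802.3ad and the total should come to 2000 Mbps even while the control panel shows 1000Mbps.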

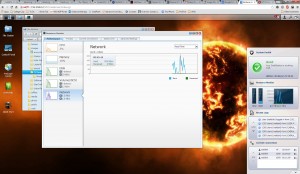

Now came the test. I have two volumes on my DiskStation: a 256GB SSD (for websites and internal applications) and three terabyte NAS drives in a RAID 0 array. Both volumes are, by their very nature, capable of much faster transfer rates than a regular desktop hard drive. To push my server and its aggregated links to their maximum, I copied a 3+ gigabyte Windows 7 installation image to both the SSD and the RAID 0 volume. Then, with two gigabit-equipped computers ready on the same network, I copied one image to one computer and the other image to the second computer at roughly the same time. The result? Pure excellence.

Did you see that?! The combined peak bandwidth of both transfers was 203 megabytes per second. That’s about 1.59 gigabits per second (203 × 8 ÷ 1,024), or roughly 1.62 if you count in decimal units, officially the fastest transfer speed I have ever witnessed in my entire 23.4-year existence. My test was successful.
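The megabytes-to-gigabits conversion depends on whether “MB” means 1,000,000 or 1,048,576 bytes, which is why the same 203MBps reading can be quoted as either 1.59 or 1.62. A small Python sketch showing both conventions (the function name is hypothetical):

```python
"""Convert a transfer rate in megabytes per second to gigabits per second.

Assumption: the copy dialog's "MB" may be decimal (10**6 bytes) or binary
(2**20 bytes); both conventions are shown since they give different answers.
"""


def mbytes_to_gbits(rate_mb_s: float, divisor: int) -> float:
    """Multiply by 8 bits per byte, then divide by 1000 when both units are
    decimal (MB to Gb) or by 1024 when both are binary (MiB to Gib)."""
    return rate_mb_s * 8 / divisor


if __name__ == "__main__":
    rate = 203  # peak combined rate from the test, in MB/s
    print(round(mbytes_to_gbits(rate, 1000), 2))  # 1.62
    print(round(mbytes_to_gbits(rate, 1024), 2))  # 1.59
```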

But why not the full two gigabits? Before I set up link aggregation on the Synology server, I achieved a full gigabit per second when transferring from the RAID 0 array to my desktop machine’s SSD, and, well, about 80-90% of a gigabit per second going from the RAID 0 array to another desktop’s old IDE drive. So why don’t we see something closer to 1.9 or 2 gigabits per second? Probably for the following reasons:

- As you can see, the 203MBps peak lasted only a few seconds. The transfers were still ridiculously fast (these 3+ gigabyte files transferred in around thirty seconds), but the speed fluctuates on my graph. I also didn’t start both copies at exactly the same moment; with better timing, I’m sure I could see more than 203MBps. The speed usually starts out near its maximum, sometimes goes a little faster, and then slows down the longer the file transfers. I don’t know the science behind why this happens instead of the transfer simply running at 100% of its potential speed the whole time, but I do know that it’s normal.

- Transferring two large files instead of one takes more processing power. Though the server was only using around 40% of its dual-core CPU, that was still twice as much as when transferring a single file. The 1GB of RAM and its speed are probably also coming into play, perhaps even more so than the higher CPU usage (I’m planning on eventually upgrading to 2GB of RAM).

- Each point on the graph in that image actually represents about four to seven seconds of time, so the transfer rate during the seconds behind the point at the 203MBps mark could have exceeded (and likely did exceed) 203MBps. The graph simply can’t show that.
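The last point is easy to demonstrate: averaging several seconds into one graph point can only flatten peaks, never create them, because the average of a window can’t exceed its largest sample. The per-second numbers in this Python sketch are invented for illustration, not measurements from my test, and the function name is hypothetical.

```python
"""Why a coarse graph can hide peaks: the average of a window of samples
never exceeds the largest sample in that window.

Assumption: the per-second samples below are made up for illustration.
"""


def window_averages(samples: list, window: int) -> list:
    """Average consecutive, non-overlapping groups of `window` samples."""
    return [
        sum(samples[i:i + window]) / len(samples[i:i + window])
        for i in range(0, len(samples), window)
    ]


if __name__ == "__main__":
    per_second = [180, 215, 225, 192, 170, 160, 150, 140]  # hypothetical MB/s
    averages = window_averages(per_second, 4)
    for avg, start in zip(averages, range(0, len(per_second), 4)):
        peak = max(per_second[start:start + 4])
        print(f"graph point {avg:.0f} MB/s, hidden peak {peak} MB/s")
```

Here the first graph point reads 203 MB/s even though one of the seconds it summarizes hit 225 MB/s.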

All in all, I am still very pleased. 1.59+ gigabits per second is plenty of speed with room to spare to simultaneously run a web server, access movies and music, and save and access documents and other files.

Depending on your setup (especially how many files you’re accessing from any single drive; whether the drive is an SSD, a RAID 0 array, a RAID 5 array, or JBOD/a single disk; and the quality and capability of each drive), you could easily see higher or lower speeds. For example, if I had all four NAS-grade disks in a RAID 0 array instead of three, I know my overall speed would improve, since I’d be reading from four drives at once instead of three. With six- and eight-drive NASes, you could achieve even better speeds with all drives in a RAID 0 array, especially if those drives were top-end SSDs, and especially if you were copying to a system just like it! I’d also wager that copying between two link-aggregated machines would show much higher speeds, depending on the drives in each machine, as long as several transfers run at once; with 802.3ad, a single file copy normally stays on one member link.
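For a rough sense of which part of the chain limits you, a back-of-the-envelope model helps: the ceiling is the smaller of what the disks can deliver and what the bonded links can carry. This Python sketch assumes RAID 0 sequential reads scale linearly with the number of disks and uses a made-up per-disk figure; the function name is hypothetical, and real arrays and networks fall short of these ideal numbers.

```python
"""Back-of-the-envelope ceiling for reading from a striped (RAID 0) volume
over a bonded link.

Assumptions: RAID 0 sequential reads scale linearly with disk count, and the
per-disk rate passed in is hypothetical. Protocol overhead is ignored.
"""


def estimated_ceiling_mb_s(disks: int, per_disk_mb_s: float,
                           links: int, link_mb_s: float = 125.0) -> float:
    """Return the smaller of the disk-side and network-side limits.

    125 MB/s is the raw ceiling of one gigabit link (1000 Mbit / 8).
    """
    disk_limit = disks * per_disk_mb_s
    network_limit = links * link_mb_s
    return min(disk_limit, network_limit)


if __name__ == "__main__":
    print(estimated_ceiling_mb_s(3, 70.0, 2))  # 210.0 (disks are the limit)
    print(estimated_ceiling_mb_s(4, 70.0, 2))  # 250.0 (the two links are the limit)
```

With a hypothetical 70 MB/s per disk, going from three disks to four raises the ceiling from 210 to 250 MB/s, and at that point the two gigabit links, not the disks, become the bottleneck.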